ReCo – Automatically Processing Text Responses from Assessments

The projects of the ReCo team centre around text responses. For example, the ReCo software automatically assesses whether a response in the PISA test is correct. The team provides a range of apps, from automatic and semi-automatic coding of responses to on-the-fly use in the classroom without any evaluation.

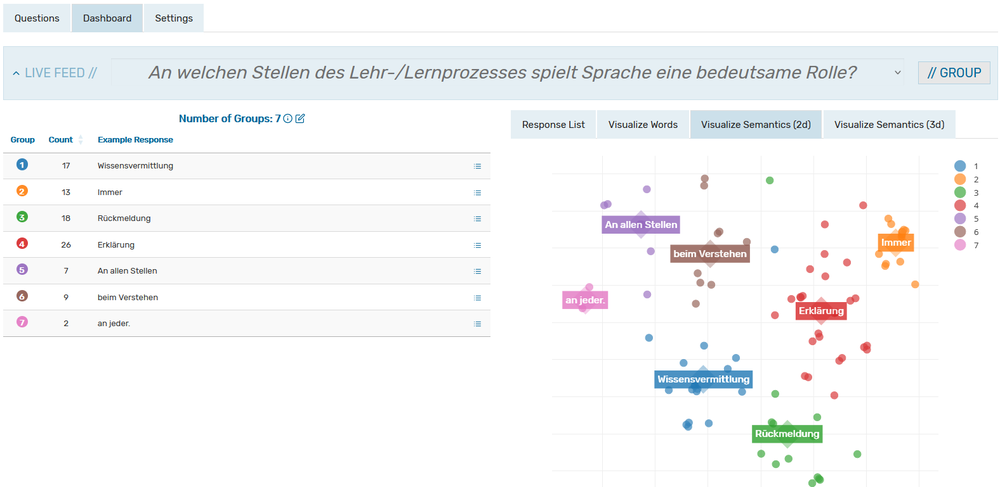

The ReCo team (Automatic Text Response Coding) works on a variety of projects for the TBA Centre at DIPF. They all centre around the automatic coding (e.g., scoring) of text responses. In both research and development, we use state-of-the-art technologies that are often associated with artificial intelligence: natural language processing, large language models, and machine learning. The corresponding transfer products are aimed at supporting either research or educational practice. Multiple projects therefore offer freely available, interactive apps. Researchers can use these to automatically evaluate text data from their assessments (ReCo software). Teachers can collect questions from all learners live in class and have them automatically grouped on a dashboard (see figure).

The methodology behind the ReCo software has been developed, implemented and researched at the Technical University of Munich and the Centre for International Comparative Education Studies (ZIB) e.V. since 2015, and it is now being continuously modernised under the same project management at the TBA Centre. Since large-scale assessments such as PISA are among the most important fields of application for automatic coding, the continued cooperation with the German PISA National Centre at the Technical University of Munich and the ZIB is an important component of the projects.

Enhancing Educational Measurement

When constructing tests, research groups often face the question of which response format to implement. If they offer response options, as in the multiple-choice format, responses can be processed easily. The downside is that this closed format invites guessing and other undesirable effects. If, however, respondents write their response into an open text field, processing becomes considerably more complicated. At the same time, open response formats are better suited than closed ones to assessing deeper understanding in most domains, because respondents have to produce a response themselves rather than just recognise the correct one.

Therefore, as early as PISA 2000, the OECD decided to include some tasks with an open response format. The resulting effort of processing the text responses is particularly challenging for large-scale studies like PISA. Each participating country or region needs to train a team of coders diligently in order to evaluate the large volume of text responses, which in recent rounds came from more than 500,000 students in total. The coders follow guidelines so that coding is as objective and as comparable across languages as possible.

Because humans have subjective perspectives and cultural backgrounds, the risk of inconsistent coding needs to be considered. Owing to limited stamina, humans are also prone to errors when exposed to such a large volume of data. Computer programs such as ReCo can improve consistency and, in turn, objectivity within as well as across languages. Moreover, a large data volume is no obstacle for a computer, which keeps coding consistent even at a large scale. Automatic coding of text responses can now be performed satisfactorily for a variety of data sets.

Funded International Research Projects: Scoring in PISA and Its Relationships with Linguistic Variance in ePIRLS

The ReCo team recently acquired funding for two research projects aimed directly at the operational use of automatic scoring of text responses in established international large-scale assessments. The OECD-funded research project on the development and innovation of PISA involved the further development of its Machine-supported Coding System. Since 2018, this approach has made it possible to automatically score certain responses that had already been scored several times by humans. It did so simply by identifying new responses that exactly matched these previously scored ones. While this already led to a significant reduction in manual effort, its usability was severely limited, because text responses in many tasks vary greatly in spelling and wording as well as in argumentation. In a collaboration with ETS, USA, and Sogang University, South Korea, the ReCo team has now taken the lead in further developing the methodology. The new method is called Fuzzy Lexical Matching (FLM). It successively allows more deviations from an exact match between responses, with the corresponding steps optimised for specific tasks and languages. This led to major savings in human coding without loss of accuracy, as has been demonstrated across a wide variety of languages (from Arabic, Hebrew, Korean and Kazakh to English, among others). This can save money and time and substantially improve data quality through greater consistency. The OECD plans to use this automatic support operationally.
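The text does not spell out FLM's individual steps. To illustrate the general idea of successively relaxing an exact match, here is a minimal sketch under stated assumptions: the three normalisation levels (case, then punctuation and whitespace) and the final approximate stage based on Python's `difflib.SequenceMatcher` ratio with a hypothetical threshold `min_ratio` are illustrative stand-ins, not the actual FLM steps, which the project optimises per task and language. The names `normalise` and `fuzzy_match` are hypothetical.

```python
import difflib
import re
import string


def normalise(text: str, level: int) -> str:
    """Hypothetical normalisation: each level tolerates more deviation."""
    if level >= 1:
        text = text.lower()  # level 1: ignore capitalisation
    if level >= 2:
        # level 2: also ignore punctuation and repeated whitespace
        text = text.translate(str.maketrans("", "", string.punctuation))
        text = re.sub(r"\s+", " ", text).strip()
    return text


def fuzzy_match(response: str, scored: dict[str, int], min_ratio: float = 0.9):
    """Return (code, level) for a new response, or a None code to defer to humans.

    `scored` maps previously human-scored response texts to their codes.
    """
    # Levels 0-2: exact lookup on increasingly normalised text
    # (level 0 corresponds to the original exact-match approach).
    for level in range(3):
        codes = {code for text, code in scored.items()
                 if normalise(text, level) == normalise(response, level)}
        if len(codes) == 1:
            return codes.pop(), level
        if len(codes) > 1:
            return None, level  # conflicting human codes: leave to coders
    # Level 3: approximate match on fully normalised text (difflib ratio).
    target = normalise(response, 2)
    best_code, best_ratio = None, 0.0
    for text, code in scored.items():
        ratio = difflib.SequenceMatcher(None, target, normalise(text, 2)).ratio()
        if ratio > best_ratio:
            best_code, best_ratio = code, ratio
    if best_ratio >= min_ratio:
        return best_code, 3
    return None, None  # no sufficiently close match: route to human coders


scored = {"The water evaporates.": 1, "It gets cold": 0}
print(fuzzy_match("the water  evaporates", scored))  # matched at level 2
```

Each stage only assigns a code when the previously scored responses it matches agree, so anything ambiguous or unmatched still goes to human coders. This mirrors the goal of saving coding effort without giving up accuracy.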

The second research project was funded by the IEA in order to investigate two research questions centring on ePIRLS, the IEA's counterpart to PISA for measuring reading literacy. First, it evaluated the accuracy of automatic scoring using both the aforementioned FLM and modern semantic representations from large language models. Second, and more importantly, it applied automatic scoring to investigate which characteristics of tasks and test takers are related to machine scoring accuracy. As hypothesised, linguistic variance in responses proved to be the main driver of difficulties in automatic scoring. Another key finding was that accuracy was largely independent of language, showing promising potential even for multilingual settings (see the project report for further details). This project was carried out in cooperation with and under the leadership of Sogang University, South Korea, as well as FernUniversität Hagen.
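The text does not state which accuracy measures the project report uses. A common way to quantify how well machine codes agree with human codes is percentage agreement together with Cohen's kappa, which corrects for agreement expected by chance. The sketch below assumes simple categorical codes (e.g., 0 = incorrect, 1 = correct); the function name `agreement` is hypothetical.

```python
import math
from collections import Counter


def agreement(human: list, machine: list) -> tuple[float, float]:
    """Return (accuracy, Cohen's kappa) for two equal-length lists of codes."""
    if len(human) != len(machine) or not human:
        raise ValueError("need two non-empty lists of equal length")
    n = len(human)
    # Observed agreement: share of responses coded identically.
    observed = sum(h == m for h, m in zip(human, machine)) / n
    # Chance agreement: product of both raters' marginal code frequencies.
    h_counts, m_counts = Counter(human), Counter(machine)
    expected = sum(h_counts[c] * m_counts[c] for c in h_counts) / (n * n)
    if expected == 1:
        # Both raters used one identical code throughout: kappa is undefined.
        return observed, math.nan
    kappa = (observed - expected) / (1 - expected)
    return observed, kappa


human_codes = [1, 1, 0, 0, 1, 0]
machine_codes = [1, 0, 0, 0, 1, 1]
print(agreement(human_codes, machine_codes))
```

Kappa is reported alongside plain accuracy because accuracy alone can look high simply when one code dominates a task.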

ReCo-Live Enables All Learners to Participate in Class

Teachers regularly ask students questions in class. Often, a few learners come forward and only one of them formulates a response to the question. This can be effective for individual purposes in classroom discourse, but if all learners are to be mobilised, this format defeats its purpose. Instead, our ReCo-Live app makes it possible to collect the responses of all learners live in class by having them type their responses on mobile devices. In this way, all learners participate and the classroom discourse is potentially more inclusive. All responses are then displayed on the teacher's dashboard and, if desired, grouped into just a few response types based on their semantic similarity (see figure above). Thus, the teacher can see typical responses as well as prominent misconceptions or excellent responses, which can be used to advance the classroom discourse.
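The text does not describe how ReCo-Live represents or groups responses. As a minimal sketch of grouping by similarity, the code below swaps in a plain bag-of-words vector as a stand-in for a semantic representation (a real system would more likely use embeddings from a language model) and assigns each response greedily to the most similar existing group if the cosine similarity reaches a hypothetical `threshold`. All names are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Stand-in for a semantic embedding: lower-cased word counts.
    return Counter(re.findall(r"[a-zäöüß]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def group_responses(responses: list[str], threshold: float = 0.5) -> list[list[str]]:
    """Greedily assign each response to the most similar group, or open a new one."""
    groups = []  # each group: {"centroid": summed word counts, "members": [...]}
    for response in responses:
        vec = embed(response)
        best, best_sim = None, 0.0
        for group in groups:
            sim = cosine(vec, group["centroid"])
            if sim > best_sim:
                best, best_sim = group, sim
        if best is not None and best_sim >= threshold:
            best["members"].append(response)
            best["centroid"].update(vec)  # running sum of member vectors
        else:
            groups.append({"centroid": Counter(vec), "members": [response]})
    return [group["members"] for group in groups]


answers = ["Plants need sunlight", "plants need light and sunlight",
           "Water boils at 100 degrees", "water boils at 100 degrees celsius"]
for members in group_responses(answers):
    print(members)
```

The greedy single pass keeps the sketch short and works incrementally as responses arrive live, but its result depends on the order of responses. A dashboard that needs a fixed number of response types would more likely use a clustering method such as k-means on proper semantic embeddings.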

The project is being carried out in cooperation with the SchuLe team at DIPF and the LernMINT program at the Leibniz University Hannover.

The projects revolve around the automatic scoring of open-ended text responses, often from assessments or classroom discourse. The research projects aim to advance research through methodological development and relevant findings, while the transfer and infrastructure projects pursue direct transfer into research and educational practice by providing freely available software.

This project is funded from the institutional and third-party budget.

This project is being carried out in cooperation with...

- Technical University of Munich

- Centre for International Student Assessment (ZIB) e.V.

- International Association for the Evaluation of Educational Achievement (IEA)

- Organisation for Economic Co-operation and Development (OECD)

- Educational Testing Service (ETS)

- Leibniz Universität Hannover

- FernUniversität Hagen

Status: Current project
Area of Focus: Education in the Digital World
Department: Teacher and Teaching Quality
Unit: Technology-Based Assessment
Education Sectors: Higher Education, Primary and Secondary Education, Science
Duration: 04/2017 - 12/2027
Funding: DIPF
Contact: Dr. Fabian Zehner, Post-doc Researcher